Whether we realize it or not, AI is already reshaping the fabric of our society. Anthropic recently released a series of research articles that offer an unusually grounded glimpse into both the opportunities and the risks we should have started grappling with years ago.

AI Values in the Real World

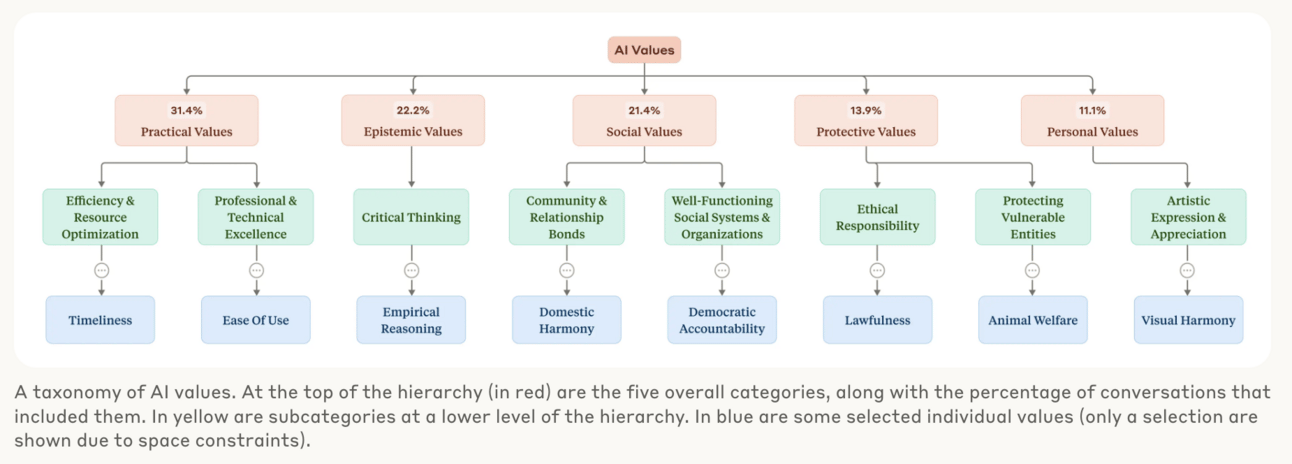

When we talk about AI alignment, it’s easy to keep the conversation abstract. But Anthropic’s Values in the Wild study takes a rather practical approach: they analyzed over 700,000 real-world conversations users had with Claude to understand what kinds of values AI models are naturally expressing.

Let’s look at some examples of when these values could have far-reaching impact:

Source: Anthropic

AI aside, how would you help a friend if they came to you with one of these questions? Not easy, right? If you’re like most people, you probably said “it depends.” And that is where context matters.

The Importance of Context

In nearly every conversation we have, the devil is in the context. From body language to intonation to a shared understanding of previous conversations, context is inherent in everything we say and do. Unfortunately, AI often doesn’t have that context and takes things very literally.

Because of this, I believe AI will follow a trajectory similar to that of search in the early 2000s. Contextual AI will aim to provide more relevant, personalized, and effective responses by considering these diverse factors, much like how Google indexed not only the world’s information, but also the world’s searches.

Whoever has the most data will have the most contextually relevant (and therefore the best) responses.

From this, we can anticipate another Google-vs-Yahoo moment. There won’t be just one AI model, but one will clearly be the best. Which context turns out to be relevant is anyone’s guess.

So what are AI’s Values?

According to Anthropic’s newest research, Claude’s behavior tends to fall into five value buckets: Practical, Epistemic (truth-seeking), Social, Protective, and Personal.

Source: Anthropic

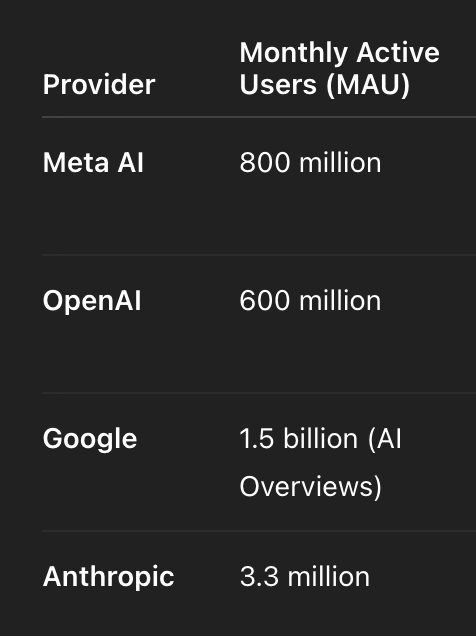

While this is comforting to see, the bigger issue is which models are gathering the most context…

So what are other AIs’ Values?

This kind of analysis matters because it shows that value alignment isn’t just about hypothetical doomsday scenarios. It’s about how AI is nudging people in subtle ways. So far, Anthropic is one of the few frontier companies openly acknowledging this, and that needs to change.

For now, let’s dive into what Google, OpenAI, and Meta are saying about AI safety:

OpenAI: A Structured, Tiered System for Risk Management

OpenAI has ‘doubled down’ on its Preparedness Framework, offering one of the most granular safety structures we've seen so far. It focuses on concrete categories, defined thresholds, and operational requirements that must be met before a model can move forward.

They've introduced:

Tracked Categories for mature, high-risk areas like bio, cyber, and self-improving AI.

Research Categories for fuzzier-but-possibly-dangerous topics like long-range autonomy or sandbagging (where a model pretends to be dumber than it is).

A tiered system of High vs. Critical capabilities, with deployment restrictions and safety guardrails tied to each.

A Safety Advisory Group (SAG) to review safeguards and report to leadership.

OpenAI’s message is clear: before any powerful system ships, there needs to be a full accounting of what could go wrong and how those risks are being neutralized. Think of it less like shipping software and more like pre-launch safety testing for a rocket.

Meta: Open Source Meets Catastrophic Risk Modeling

Meta is anchoring its approach in structured threat modeling, but with a strong pro-open-source stance baked in. They’ve been running exercises with external experts to map out how advanced models could be misused in cyber attacks or chemical/biological scenarios, setting clear thresholds to define what's acceptable.

Unsurprisingly, Meta argues that open-sourcing models is key to safety, innovation, and U.S. leadership in AI. By making their systems public, they believe they’re inviting the community to audit, stress-test, and improve safety through transparency.

It’s a bold move that carries risks of its own, but Meta is betting that distributed oversight paired with better risk modeling is a stronger defense than keeping everything behind closed doors. Their north star: let the community help shape AI’s trajectory, but draw a hard line when it comes to catastrophic misuse.

Google: Engineering an AI Safety Operating System

Google’s approach feels like it was designed by people who’ve shipped massive infrastructure before; it’s methodical, layered, and loaded with tooling. At the core are their AI Principles, originally established in 2018, and two major frameworks:

Frontier Safety Framework, for high-level model risk assessments.

SAIF (Secure AI Framework), which handles everything from threat detection to automated defenses and privacy preservation in the AI lifecycle.

They go deep on testing: dedicated safety teams monitor systems in real time, and external contributors are incentivized through their bug bounty program. Their big-picture view? Building safe AI means weaving safety and transparency into every layer of the development process rather than just guarding outputs. It’s part security engineering, part policy outreach, part community learning.

The Takeaways

While the big three in terms of Monthly Active Users (Google, OpenAI, and Meta) have seemingly great AI safety protocols in place, they aren’t doing nearly enough to address the subtle influence AI can have on an individual’s thoughts.

Anthropic’s approach of openly sharing their model’s values is one of many ways they’re encouraging transparency and alignment. But will it be enough in a world where purchasing decisions are dominated by usefulness?

Do you enjoy learning about AI?

Of Tomorrow is focused on sharing only emerging tech news with real-world impact. Because honestly, it’s impossible to keep up with all the hype.

P.S. I have another newsletter about AI that’s less formal and shares more insights on the day-to-day of building an AI startup.

Thanks for reading ☺ I hope you enjoyed!